About Me

Hi, I'm Cathy (my legal name is Chengyu, which can be a little tricky to pronounce). I am a senior undergraduate student at Colby College majoring in Computer Science (AI concentration) and Mathematical Sciences, with a minor in Statistics.

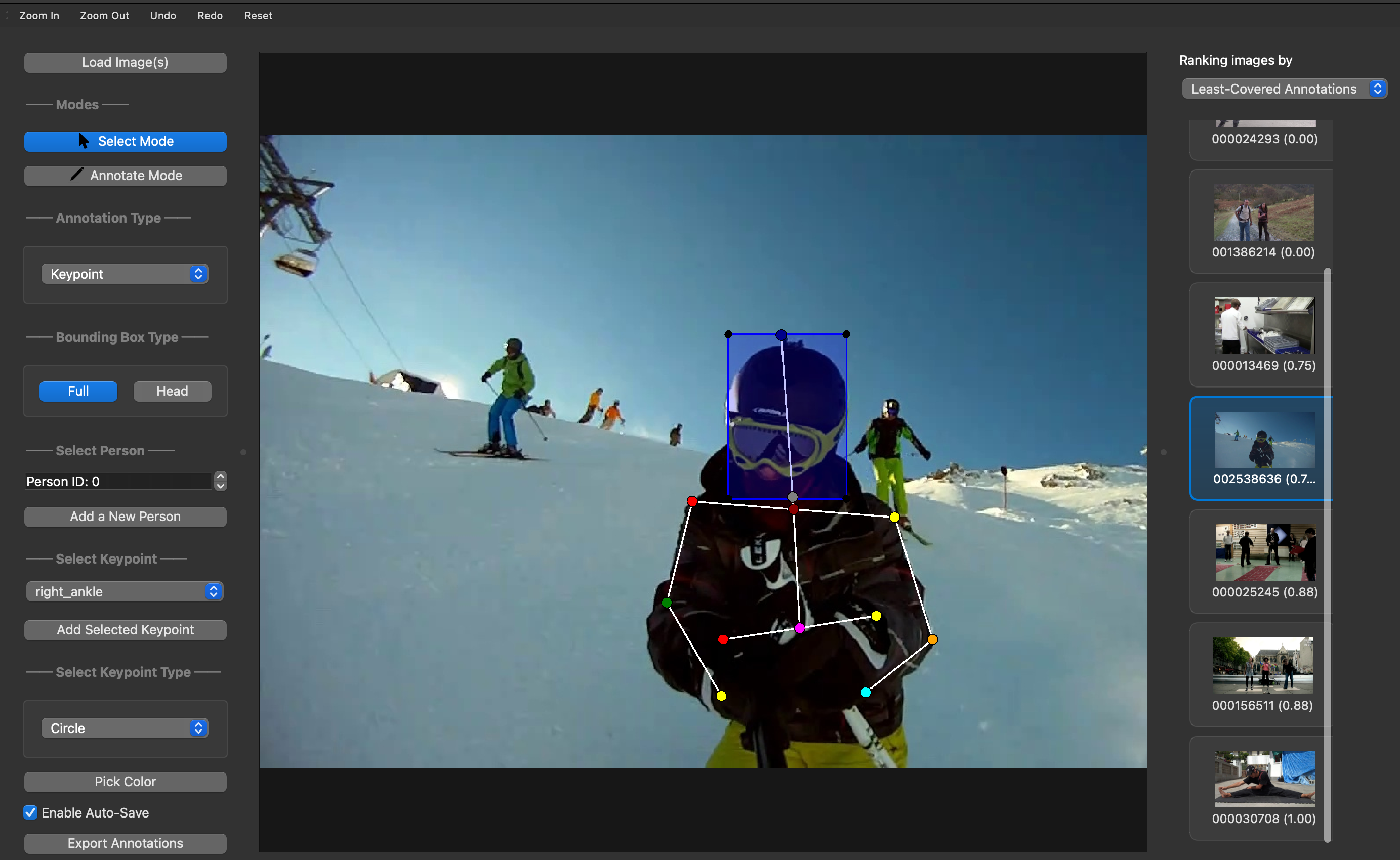

Under the supervision of Dr. Tahiya Chowdhury in Colby's HUMANE Lab, my current research focuses on improving the reliability and robustness of human pose estimation systems by tackling two challenges: annotation quality and edge cases in training data, such as occlusion. This project, which I will extend into my senior honors thesis, contributes to multimodal interaction by improving how systems interpret and respond to complex human body movements. In addition, I am working with Dr. Stacy Doore on machine learning methods for generating accessible art descriptions in museums for people with visual impairments.

I'm also grateful to have had the opportunity to work on research projects in computer vision, LLM-driven agent simulation, and multimodal interaction for conversation analysis under the guidance of Dr. Amanda Stent, Dr. Michael Yankoski, Dr. Trenton Ford, and Dr. Veronica Romero.

Looking ahead, I am seeking a PhD position starting in Fall 2026, where I hope to further explore research in computer vision, multimodal AI, and responsible and explainable AI. If you are a faculty member or know of an open position in a lab, I would love to connect.

Research

Pose Estimation and Responsible Multimodal Interaction

Currently integrating pose estimation techniques with ethical AI principles as part of an ongoing research project in Colby's HUMANE Lab, including proposing an occlusion index for assessing robustness.

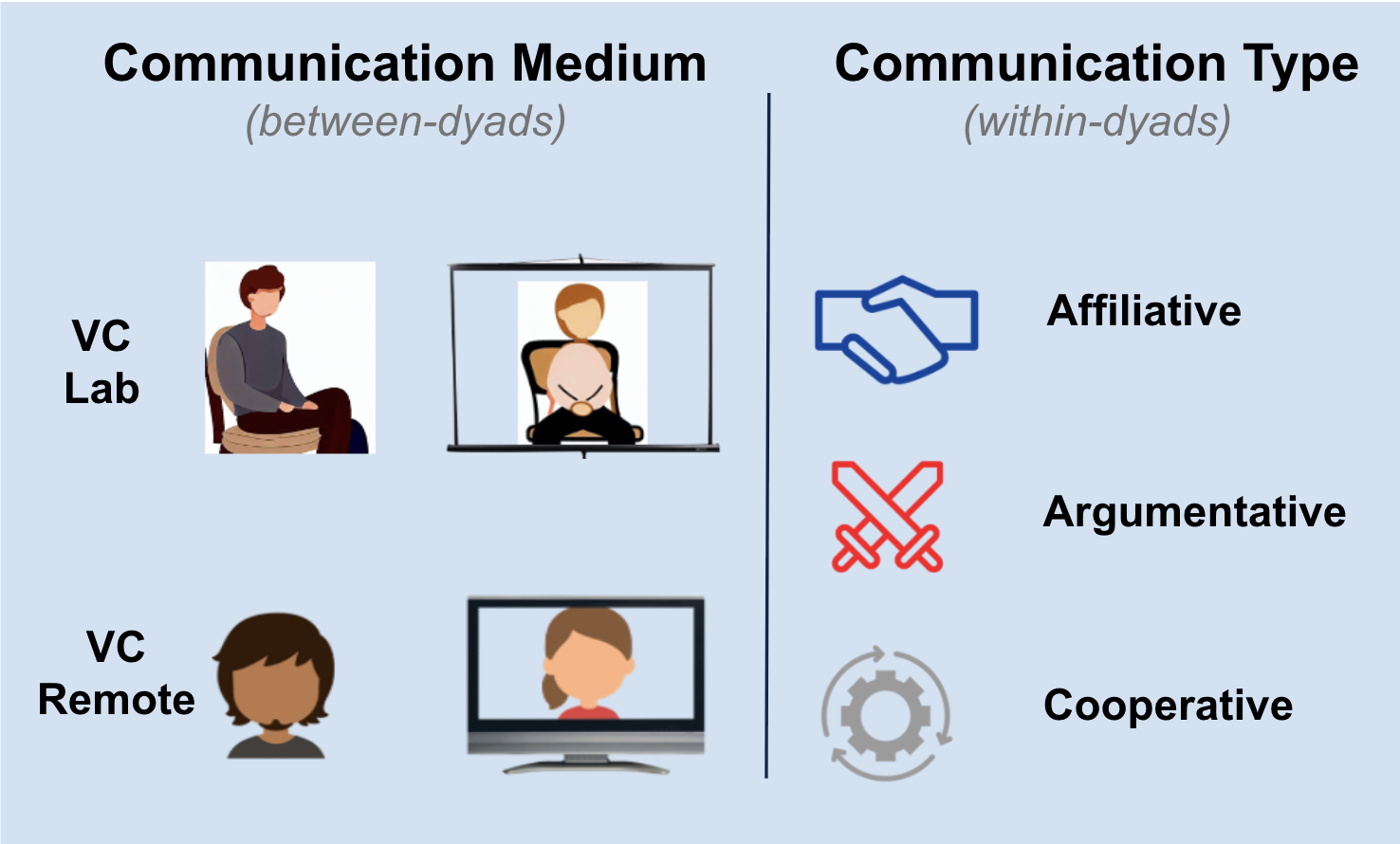

Movement Coordination in Dyadic Conversations

This research, combining computer science and psychology, used methods such as Cross-Recurrence Quantification Analysis (CRQA), Dynamic Time Warping (DTW), and Convergent Cross Mapping (CCM) to quantify how two conversation partners coordinate their movements.

Comp-HuSim: LLM-Based Agent Simulation

At Colby's Davis Institute for AI, I helped build a persistent multi-agent simulation platform (Comp-HuSim: Complex Human Simulation) using large language models to simulate agents with digital personalities.

AI for Environmental Sustainability

Developed a dataset of ~1,000 drone images and trained a computer vision model to detect coastal litter in Maine, achieving ~92% accuracy in support of local recycling efforts.

Publications

Note: * indicates equal contribution